RAG Output Validation

Part 1: RAGAs for Quantitative Validation

Iñaki Peeters

AI Solutions Analyst

As we know by now, building a basic proof-of-concept (PoC) RAG system is not overly difficult. Scaling that PoC to an operational business solution that is integrated into the daily workflow of its users is more complex. Besides high-quality data and a robust interface, both needed before deploying a production-ready version of your RAG system, you want to make sure that you have built an effective system that provides the right answers. To that end, output validation is a crucial but challenging aspect of RAG.

At Faktion, we developed a custom framework to facilitate RAG output validation, making it possible to set up a high-performing, scalable RAG system.

Defining What the Right Answer is

But what counts as a right answer? It is a valid question, since the word ‘right’ has many facets, each of which we want to see fulfilled in our RAG output. To name a few:

- Accuracy and factuality: the output should be accurate and factually correct. It should be based on current knowledge and available data, reflecting correct information.
- Relevance: the output should address the query and nothing more. It should not include unrelated subjects or deviate from the intended purpose of the query.
- Completeness: on the other hand, the output should be complete and cover all parts of the query without leaving essential aspects unaddressed.
- Coherence: the output should follow a logical and human-like structure, making it readable.
- Clarity and conciseness: the output should be clear and to the point, avoiding overly complex phrasing.
- Contextual appropriateness: the output should be appropriate with regard to the context of the query, following the user’s intent.

This list is not exhaustive. Other aspects can be equally important for some use cases, including lesser-known characteristics such as specific writing styles, source citation, cost efficiency, or even maliciousness or controversiality.

Why is RAG Output Validation so Difficult?

Unlike classic machine learning problems with a numerical output, the output of question answering systems takes the form of unstructured textual data. Straightforward evaluation metrics such as precision, recall, F1-score, and accuracy are not sufficient on their own in this situation. Traditional question answering systems have two components, the question and the answer, but RAG systems also introduce context (the retrieved information). This makes RAG even harder to evaluate than traditional question answering systems. A correct answer is not enough; the retrieved context should also be right.

To properly evaluate RAG systems, both quantitative and qualitative output validation is crucial. Traditional text generation tasks were often validated using common metrics such as:

- BLEU (BiLingual Evaluation Understudy)
- ROUGE (Recall-Oriented Understudy for Gisting Evaluation)
- METEOR (Metric for Evaluation of Translation with Explicit ORdering)

With the recent rise of LLMs, the output validation landscape has changed significantly. While the above metrics are still valuable for tasks like translation, they have become less relevant for question answering and summarisation with LLMs and RAG systems, since they largely reward surface word overlap with a reference text rather than factual correctness.
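To make the limitation of overlap-based metrics concrete, here is a minimal sketch of a unigram-overlap F1 score in the spirit of ROUGE-1. It is a simplified illustration, not the official ROUGE implementation, and the function name and example sentences are made up for this post. A factually wrong answer that reuses the reference wording scores higher than a paraphrase that says the same thing in different words.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1-style F1: harmonic mean of unigram precision and recall."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


# Hypothetical example sentences, chosen only to illustrate the effect.
reference = "the contract ends on 31 december"
paraphrase = "the agreement expires at year end"   # same meaning, different words
wrong_answer = "the contract ends on 31 january"   # wrong fact, same wording

paraphrase_score = unigram_f1(paraphrase, reference)
wrong_score = unigram_f1(wrong_answer, reference)
print(f"paraphrase: {paraphrase_score:.2f}, wrong answer: {wrong_score:.2f}")
```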

Output validation for RAG goes a step beyond output validation for text generation. RAG systems must be validated not only on the generated text, but on retrieval, generation, and the combination of both (the end-to-end pipeline). It is crucial to quantify whether, and to what extent, the retrieval component is able to find the right pieces of information to feed to the generation component. Validating only the generated output would be too narrow, because it leaves the root cause of suboptimal performance unclear: poor retrieval or poor generation.
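As a rough sketch of why separate scores help, the snippet below maps a retrieval score and a generation score to a likely root cause. The function name, the labels, and the 0.7 threshold are hypothetical choices for illustration; suitable thresholds depend on the metrics and the use case.

```python
def diagnose(retrieval_score: float, generation_score: float,
             threshold: float = 0.7) -> str:
    """Point to the likely weak component given two scores in [0, 1].

    The threshold is an assumed cut-off, not a recommended value.
    """
    retrieval_ok = retrieval_score >= threshold
    generation_ok = generation_score >= threshold
    if retrieval_ok and generation_ok:
        return "healthy"
    if not retrieval_ok and generation_ok:
        return "poor retrieval"
    if retrieval_ok and not generation_ok:
        return "poor generation"
    return "poor retrieval and generation"


print(diagnose(retrieval_score=0.4, generation_score=0.9))
```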

Framework for Validating RAG Performance

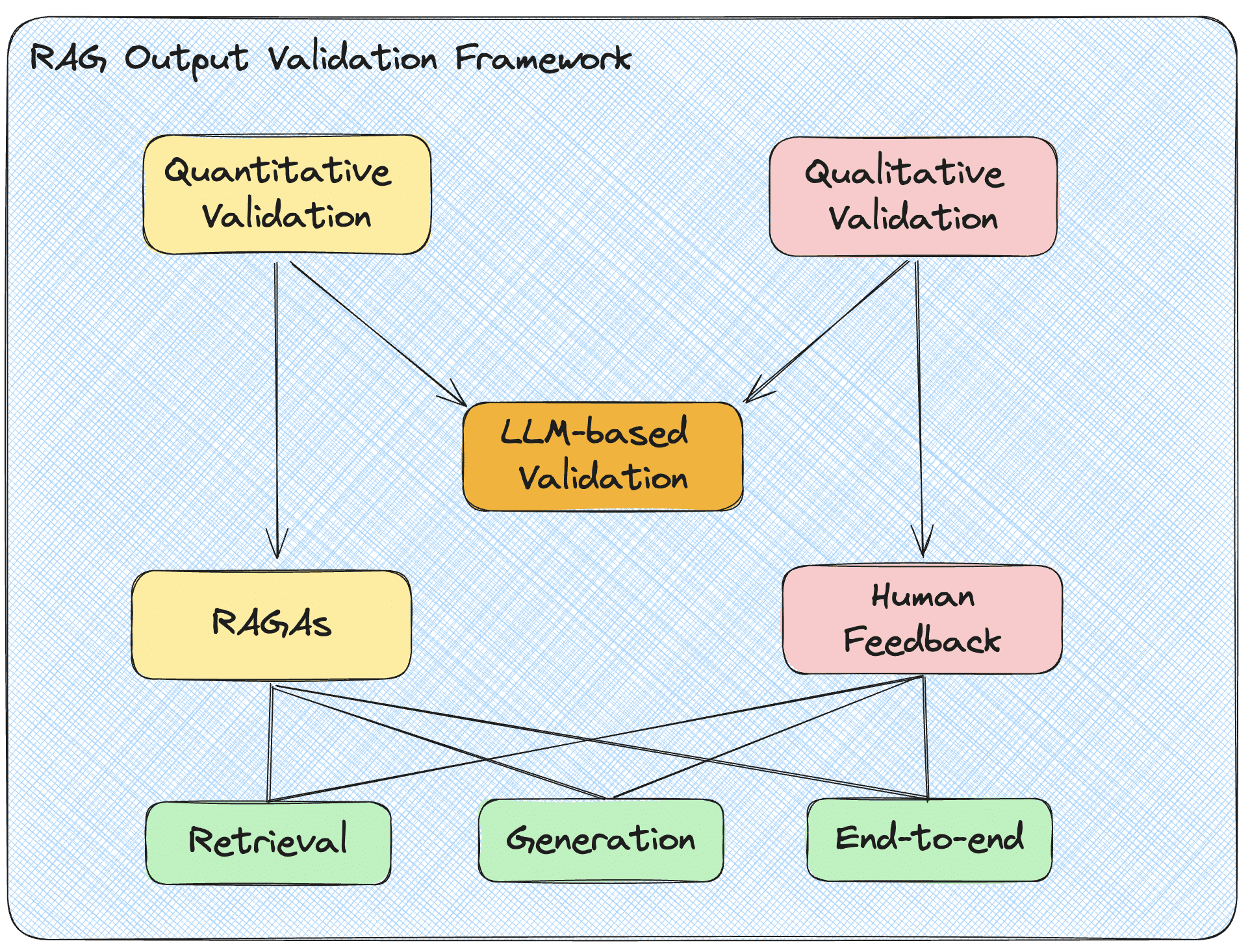

Luckily, there are frameworks available that facilitate output validation of RAG systems. One of the most promising is ‘RAGAs’ (Retrieval-Augmented Generation Assessment), which provides a range of performance metrics to evaluate context retrieval, output generation, and the end-to-end RAG pipeline. Frameworks like RAGAs often focus on quantitative validation and leave qualitative validation aside. Since qualitative validation holds significant value, we propose an extended output validation framework, shown in the accompanying figure.

The framework contains both quantitative and qualitative output validation facets. Broadly speaking, three building blocks can be distinguished. On the quantitative side, the RAGAs framework is used to validate retrieval, generation, and the end-to-end pipeline. On the qualitative side, human validation is used to assess the output of the RAG system. In between, LLM-based validation combines quantitative and qualitative validation. The framework takes four pieces of information as input:

- Question (Q): the query provided by the user of the RAG system.
- Context (C): the information retrieved by the RAG system.
- Answer (A): the output provided by the RAG system, based on the retrieved context.
- Ground Truth (GT): the true and ideal answer to the query. Think of it as an established, ‘golden batch’ answer to your question, satisfying all requirements needed for the right answer.
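The four inputs can be captured in a small record per evaluated query. The following is a minimal sketch; the class and field names are assumptions made for this post and do not come from any particular library. The ground truth is optional because it is not always available.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class EvalSample:
    """One evaluated query; names are illustrative, not a library schema."""
    question: str                       # Q: the user's query
    contexts: List[str]                 # C: retrieved passages
    answer: str                         # A: generated output
    ground_truth: Optional[str] = None  # GT: ideal answer, if one exists

    def has_ground_truth(self) -> bool:
        return bool(self.ground_truth and self.ground_truth.strip())


# Hypothetical sample without a ground truth.
sample = EvalSample(
    question="When does the contract end?",
    contexts=["The contract runs until 31 December."],
    answer="The contract ends on 31 December.",
)
print(sample.has_ground_truth())
```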

Building Block 1: RAGAs framework

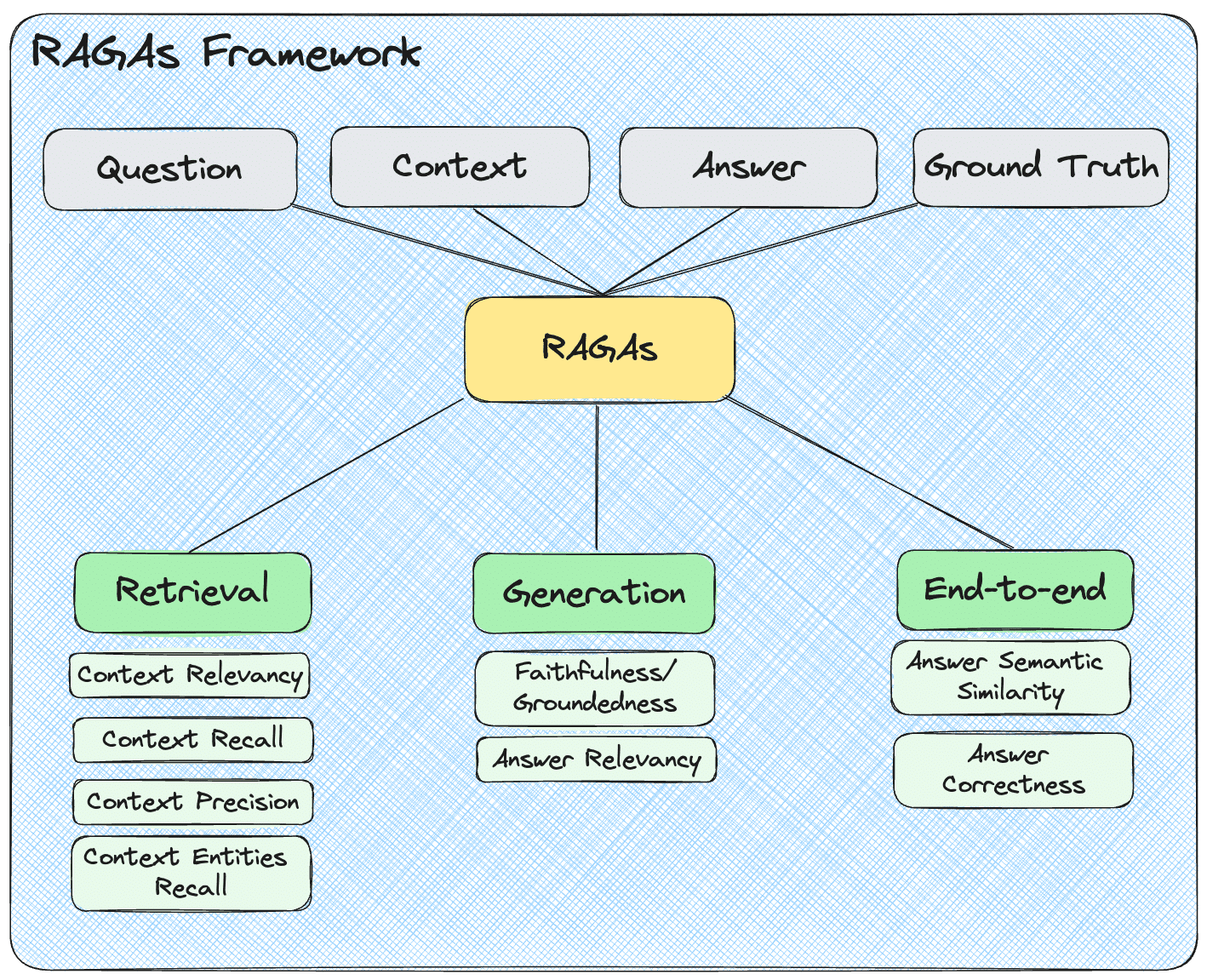

The RAGAs framework validates both the retrieval and generation components of the RAG system, while also providing performance metrics to evaluate the end-to-end RAG pipeline. An overview of the RAGAs framework is provided in the accompanying figure.

For retrieval validation, RAGAs offers the following metrics, with the respective inputs for each metric in parentheses:

- Context relevancy (Q and C): measures the extent to which the retrieved context is relevant and useful for answering the query.
- Context recall (GT and C): measures the extent to which the system is able to retrieve all essential information relevant to the query from its knowledge base, ensuring no critical details are missed.
- Context precision (Q and C): evaluates how accurately the retrieved information matches the query, focusing on the relevance and quality of the retrieved information. In a sense, it measures the signal-to-noise ratio of the retrieved context.
- Context entities recall (GT and C): measures the effectiveness of the system in capturing all relevant entities from the knowledge base.
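As an illustration of a retrieval metric, context recall can be sketched as the share of ground-truth sentences that the retrieved context supports. The snippet below is not the RAGAs implementation: LLM-based evaluation would normally decide whether a sentence is supported, whereas `token_overlap_judge` is a hypothetical stand-in, and the function names and the 0.6 threshold are assumptions.

```python
import re
from typing import Callable, List


def split_sentences(text: str) -> List[str]:
    """Naive sentence splitter on ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def token_overlap_judge(statement: str, context: str,
                        threshold: float = 0.6) -> bool:
    """Placeholder judge: is enough of the statement's vocabulary in the context?"""
    tokens = re.findall(r"\w+", statement.lower())
    context_tokens = set(re.findall(r"\w+", context.lower()))
    if not tokens:
        return False
    hits = sum(1 for t in tokens if t in context_tokens)
    return hits / len(tokens) >= threshold


def context_recall(ground_truth: str, contexts: List[str],
                   judge: Callable[[str, str], bool] = token_overlap_judge) -> float:
    """Fraction of ground-truth sentences judged as supported by the context."""
    statements = split_sentences(ground_truth)
    if not statements:
        return 0.0
    joined = " ".join(contexts)
    supported = sum(1 for s in statements if judge(s, joined))
    return supported / len(statements)


# Hypothetical example: the second ground-truth sentence was not retrieved.
gt = "The contract ends on 31 December. Renewal requires written notice."
retrieved = ["The contract ends on 31 December 2024."]
recall = context_recall(gt, retrieved)
print(recall)
```

Passing the judge as a parameter keeps the ratio logic separate from the support decision, so the placeholder can be swapped for an LLM call.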

In terms of generated output validation, the following metrics are available:

- Faithfulness or groundedness (C, A, and Q): assesses the degree to which the generated response is factually accurate and supported by the retrieved context, without introducing ungrounded elements.
- Answer relevancy (Q and A): measures how closely a generated answer aligns with the intent and specifications of the query, focusing on completeness and pertinence.
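Faithfulness is commonly expressed as the fraction of claims in the answer that the retrieved context supports. The sketch below assumes that claim extraction and verification have already been done, for example by an LLM judge, and only shows the final ratio. The `ClaimVerdict` type and the example claims are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClaimVerdict:
    """A claim extracted from the answer and whether the context supports it."""
    claim: str
    supported: bool


def faithfulness_score(verdicts: List[ClaimVerdict]) -> float:
    """Supported claims divided by total claims; 0.0 if there are no claims."""
    if not verdicts:
        return 0.0
    return sum(v.supported for v in verdicts) / len(verdicts)


# Hypothetical verdicts, as an upstream judge might produce them.
verdicts = [
    ClaimVerdict("The contract ends on 31 December.", True),
    ClaimVerdict("The contract renews automatically.", False),
]
score = faithfulness_score(verdicts)
print(score)
```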

To validate the end-to-end RAG pipeline, encompassing both information retrieval and output generation, RAGAs offers the following metrics:

- Answer semantic similarity (GT and A): measures the semantic alignment between the generated answer and the ground truth, evaluating how well the meanings correspond.
- Answer correctness (GT and A): gauges the accuracy of the generated answer compared to the ground truth, evaluating both the factual and contextual correctness.
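A common way to quantify semantic alignment is the cosine similarity between embedding vectors of the answer and the ground truth. The sketch below uses hand-made three-dimensional vectors in place of real embeddings; in practice the vectors would come from an embedding model, which is outside the scope of this snippet.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy vectors standing in for embeddings (assumed values, not model output).
answer_vec = [0.20, 0.80, 0.10]
ground_truth_vec = [0.25, 0.75, 0.05]
unrelated_vec = [0.90, 0.05, 0.30]

close = cosine_similarity(answer_vec, ground_truth_vec)
far = cosine_similarity(answer_vec, unrelated_vec)
print(f"answer vs ground truth: {close:.3f}, answer vs unrelated: {far:.3f}")
```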

(sources: RAGAs paper and GitHub repository)
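The inputs listed per metric above also show which metrics remain usable when no ground truth is available. The following sketch encodes that mapping as a plain dictionary; the metric keys are labels chosen for this post and are not guaranteed to match identifiers in the RAGAs library.

```python
from typing import Dict, List, Set

# Required inputs per metric, as listed in this post.
METRIC_INPUTS: Dict[str, Set[str]] = {
    "context_relevancy": {"Q", "C"},
    "context_recall": {"GT", "C"},
    "context_precision": {"Q", "C"},
    "context_entities_recall": {"GT", "C"},
    "faithfulness": {"C", "A", "Q"},
    "answer_relevancy": {"Q", "A"},
    "answer_semantic_similarity": {"GT", "A"},
    "answer_correctness": {"GT", "A"},
}


def computable_metrics(available: Set[str]) -> List[str]:
    """Metrics whose required inputs are all present, in alphabetical order."""
    return sorted(
        name for name, needed in METRIC_INPUTS.items() if needed <= available
    )


# Without a ground truth, only the metrics based on Q, C and A remain.
without_gt = computable_metrics({"Q", "C", "A"})
print(without_gt)
```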

What About the Ground Truth?

As discussed above, the ground truth is an important asset for several evaluation metrics. But where do we get this ground truth? The output of the RAG system has to be compared with a ground truth dataset to validate its performance, but such datasets are rarely readily available within companies. Broadly speaking, there are two ways to establish a ground truth dataset. On the one hand, the ground truth can be provided by a domain expert who meticulously crafts the perfect answers to queries. This manual approach is time-consuming and labour-intensive. On the other hand, LLMs can be used to synthetically generate ground truth datasets. In the next blog post, we’ll dive deeper into the different ways to create a ground truth dataset, together with the associated challenges and implications. Stay tuned!
