Why data has become a strategic asset for your business

Julian, Jeroen & Maarten

ML & Business Development

What is data-centric AI and why does it matter?

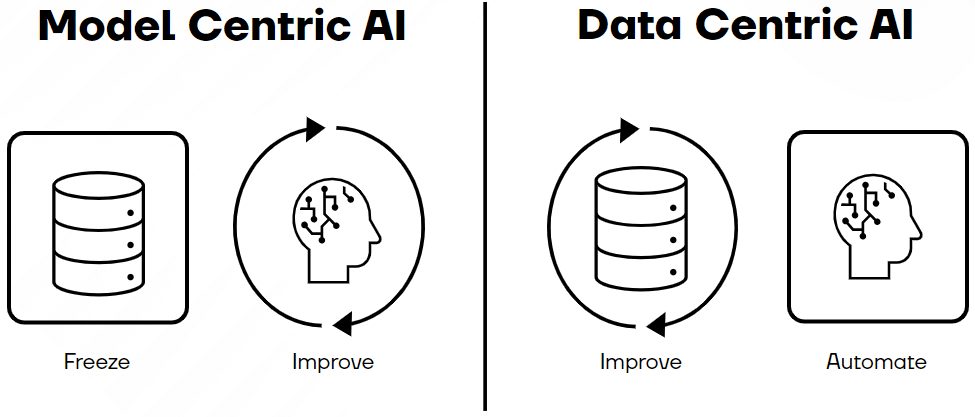

Historically speaking, Artificial Intelligence (AI) has focused much on the algorithmic aspect, as large amounts of data were not readily available in the early days. However, the digital transformation of our world has ushered an era of data abundance. Today, data is everywhere, and modern AI systems (particularly deep learning) are trained using huge datasets to solve complex tasks. Undertaking an AI project without any data is impossible, but you might not always need as much data as you would assume. AI pioneers such as Andrew Ng (Founder & CEO of Landing AI) believe that companies need to move from a model-centric approach to a data-centric approach. Instead of focusing on the code and algorithms, companies should focus on developing engineering practices for creating and improving their data in ways that are reliable, efficient, and systematic. Gone are the days when data was considered static; a dynamic perspective has emerged among AI practitioners.

Data-centric AI (DCAI) recognizes that the foundation of successful AI projects lies in the data itself. Companies are painfully starting to realize that if they want to create real value with AI, they need enough qualitative data to fuel the models. The accuracy and reliability depend heavily on the quantity and quality of the data they are trained on - garbage in, garbage out. Even the most sophisticated algorithms will produce unreliable results if the underlying data is flawed, incomplete, or biased.

This means that your value creation with AI will depend on how well you take care of your enterprise data; this is your secret sauce, your unique differentiator in the realm of AI. Models will continue to improve incrementally, however, how you acquire and govern your data assets makes the difference.

How Faktion applies a data-centric approach in developing custom AI solutions

Over the past 7 years, Faktion has collaborated with numerous clients across various industries to develop and implement custom AI solutions aimed at enhancing operational efficiency. In every project, we start with the customer's unique needs and priorities and work our way to the data and technology. Throughout our journey, we've encountered a multitude of challenges and setbacks, primarily stemming from issues related to the quantity and quality of the provided datasets by our clients. The issues didn't lie in the availability or performance of algorithms, it often boiled down to the lack of high-quality data as a fuel for the models. While AI models have improved and have become more available in recent years, companies are now realizing that they will not unleash their full potential with messy, scattered, and low-quality data.

Data-centric AI is the discipline of systematically engineering the data used to build an AI solution or system. Starting from your needs and priorities, you should work your way to the data prerequisites. By adopting a data-centric approach, we have seen significant improvements in the development, deployment, and performance of our AI-based solutions. In what follows, we explain how we apply data-centric techniques to deliver based on different use cases and data types. Remember, data-centric AI is an approach that isn't specific to a particular industry. It is a perspective that involves a set of engineering practices that you can apply for improving data in ways that are reliable and efficient.

Visual Damage Detection:

Synthetic data generation for a computer vision application

One of our Dutch clients specializes in the design and manufacturing of sustainable solutions for air cargo. When they approached us to develop an intelligent solution to improve the process of inspecting these Unit Loading Devices (ULDs), we were all ears. The inspection process of ULDs is quite stringent and time-consuming. Before every flight, they must be inspected for defects and damage (dents, scratches, etc.). Our client was looking to develop a solution that could automatically detect and classify the type of damage, as well as recommend the next best action (e.g., ready to fly, needs repair). At the time, the inspection process would be performed manually by a human inspector, which was prone to error.

Our initial idea was to train a supervised model with the defective data they had already captured and labelled. However, it quickly became clear that the data was qualitative but insufficient in quantity to train a supervised model with the desired performance. We applied synthetic data techniques by using 3D CAD programs and game development engines, hereby successfully engineering a dataset to train the supervised model. This data-centric approach enabled us to create an appropriate dataset and build a solution.

The encoder-decoder architecture: Learning normal data patterns and highlight deviations through reconstruction errors

Think of an encoder-decoder like a superhero with X-ray vision. This hero can look at objects and see their hidden flaws. When they look at something, they can reduce the image by stripping away the normal parts and focus only on what's unusual or different. It's like they're wearing special glasses that highlight the strange stuff. Later, they can use a spell to bring back the normal parts and show you the whole object again. So, you see the object as it should be, but with any anomalies glowing brightly.

The encoder-decoder architecture is a prevalent model in deep learning, especially for sequence-to-sequence tasks, but they can also fill in missing data points and help reduce noise. The encoder processes input data into a context vector (a simpler form of the data), which the decoder then uses to generate output data.

Data-centric AI emphasizes the pivotal role of data quality and processing in achieving model accuracy. For encoder-decoder models to excel, they require high-quality, accurately annotated training data. In data-centric AI, encoder-decoders can produce additional synthetic data, increasing dataset variety, and ensuring overall data quality. They also assist in standardizing data from different sources and can identify and correct data inconsistencies. By reducing data dimensions, they simplify feature learning. These architectures detect anomalies by noting high reconstruction errors, which leads to better data management in AI projects.

Moreover, data-centric approaches might involve data augmentation or using domain-specific datasets to enhance model robustness. Error analysis in a data-centric paradigm can identify and rectify problematic input sequences. In essence, while encoder-decoder offers a solution for sequence tasks, data-centric AI ensures the data feeding is optimal.

Predictive maintenance for new equipment: Introducing synthetic anomalies in a time series

Detecting defects, such as damage in the case of our Dutch ULD manufacturer, is a well-studied challenge and a common use case for industrials. But what if you want to detect anomalies that have never occurred before? For instance, when new equipment/machines are put into production and failures have yet to emerge. Rather than waiting for things to break, we want to be proactive and create anomalous data ourselves. This is exactly what we did with a client who produces wind turbine parts – leveraging synthetic, anomalous data to prevent defects and failures before they first occur. This ingenious technique enabled us to assemble a dataset and proceed with the project instead of waiting to for downtime or defects to emerge.

Tagging product data with an NLP-based AI system

As stated before, the challenges faced with DCAI aren't only related to the volume of data but are also linked to its quality, especially when dealing with large datasets. Recently, SmartWithFood (SWF), a Colruyt Group spin-off, came to us with a challenge regarding the onboarding of new retailers on their platform. With their platform, SWF leverages the available product data from retailers to offer services to their users, such as recipe-to-basket. During the onboarding phase, the retailer's product data has to be ingested into SWF's database, but to ensure the quality of these data as well as standardization in their database, domain experts (dieticians in this case) had to manually process the data and place the product properties in a graph. This was a manual and time-consuming task and distracted the dieticians from doing their core and value-adding activities. By automating these pre-processing tasks and manual actions by the domain experts, we achieved a ‘reliable automation’ of over 70% coverage over all categories and subcategories at 98% accuracy for the main service levels. This reduces the average onboarding time of a new client from months to just a week.

Faktion's Intelligent Data Quality Optimization (IDQO) Toolbox

Our IDQO toolbox is an AutoML toolbox that enables companies to streamline 'data quality tasks' (e.g., classification, matching, enrichment, validation, clustering, similarity search, etc.), reducing the need for domain expertise (remember the dieticians in the SWF case). We envision IDQO as reusable building blocks that we can easily apply depending on the specific needs. Through our collaboration with clients in various industries (Insurance, Banking, Retail, Wholesale, FMCG, HR & payroll), we have noticed that there is a lot of time spent on managing (manual) tasks related to data quality and governance. With our approach, we want to give data stewards and data teams the necessary tools to 'model' the knowledge of domain experts and bring data quality knowledge to the enterprise level using Domain Knowledge Models.

Why do your unique data assets make a difference?

Data determines the output quality of your AI systems, and by extension, the value of your company. Regardless of your industry, we would recommend any business to take good care of proprietary data. Based on your business objectives and priorities, you should start out with a suitable data strategy and be thoughtful about data acquisition and data quality assurance. Throughout our journey in AI and collaboration with our clients, we have encountered several setbacks related to data quantity and quality. As discussed in this blog, we were able to tackle some of these challenges with ingenious, data-centric techniques such as synthetic data generation. In other cases, setbacks related to data have slowed us down or in the worst case, hindered us from proceeding with the project until we had created an appropriate dataset. Your data assets will determine your success with AI, so don't let data deficiencies get in the way of creating value today.