Prompt Flow in Azure ML: exploring new features

Part 2: A streamlined way to build LLM-based AI applications with RAG

Ben Burtenshaw

NLP Engineer

In this post, we will delve into the technical aspects of developing and deploying RAG systems using Azure ML Prompt Flow. We will cover the basics of RAG, its benefits for LLM-based AI apps, and the steps involved in building and deploying RAG systems with Azure ML Prompt Flow 🤖.

What is Retrieval-Augmented Generation (RAG)?

RAG is an application architecture that combines LLMs with external data sources to improve their responses. This makes RAG well suited to applications that require access to background knowledge, such as chatbots, recommendation systems, and question-answering systems.

RAG systems work by first retrieving relevant information from an external data source, such as a database or document store. This information is then supplied to the LLM as context for the given query. This approach has several advantages over using an LLM alone.
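The retrieve-then-generate loop can be sketched in a few lines of Python. This is a minimal, dependency-free illustration, assuming a toy in-memory document list and simple word-overlap scoring in place of a real embedding model and vector index. `DOCUMENTS`, `retrieve` and `build_prompt` are hypothetical names, and the final prompt is printed rather than sent to an LLM.

```python
import re

# Hypothetical in-memory knowledge base standing in for an external data source.
DOCUMENTS = [
    "Prompt flow is a development tool in Azure Machine Learning for LLM applications.",
    "FAISS is a library for efficient similarity search over dense vectors.",
    "Fine-tuning adapts a pre-trained model to a task with a smaller dataset.",
]


def tokenize(text: str) -> set[str]:
    """Lower-case the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents sharing the most words with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    # Highest overlap first; documents with no overlap are dropped.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query: str, context: list[str]) -> str:
    """Supply the retrieved passages to the LLM as context for the query."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}\n"
        "Answer:"
    )


if __name__ == "__main__":
    question = "What is FAISS used for?"
    prompt = build_prompt(question, retrieve(question, DOCUMENTS))
    print(prompt)  # In a real RAG system, this string is sent to the LLM.
```

The instruction to answer "using only the context" is one common way of steering the model towards the retrieved information rather than its internal knowledge.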

Why use RAG for LLM-based AI applications?

RAG systems offer a number of benefits for LLM-based AI applications, including:

Improved performance: because they are supplied with more relevant information, RAG systems can provide more accurate and precise responses than LLMs alone. This is important for applications such as chatbots and question-answering systems, where users expect answers grounded in relevant context.

Reduced development effort: RAG systems can make LLM-based AI applications easier to develop, because they do not require training or fine-tuning a model.

Increased scalability: RAG systems can scale to handle large databases and complex queries, which is important for applications such as recommendation systems and search engines.

Reduced hallucination: when supplied with relevant information, RAG systems are less likely than LLMs alone to hallucinate, that is, to produce inaccurate or nonexistent information.

Retrieval vs. Fine-tuning

In the NLP world, pre-trained models like the GPT series are versatile general models that can perform many tasks, from rap music creation to cookery advice. Retrieval-augmented generation (RAG) systems enhance these models' responses by pulling up-to-date data from external sources at query time. An alternative approach is fine-tuning, where the LLM is adapted to a specific task or domain to improve its performance.

Fine-tuning involves adapting a pre-trained model to a specific task using a smaller, task-specific dataset. This method allows the model to specialize without the computational burden of retraining from scratch. In contrast to RAG, which dynamically retrieves information, fine-tuning optimizes the model’s internal knowledge for a particular domain. It’s a more static approach but can be effective, especially when the task-specific nuances are critical.

When implementing retrieval-based systems, it’s important to remember that the model itself has not been altered: its weights, and therefore its understanding of the domain, are unchanged. As a result, the model may not handle the retrieved context as expected. The choice between retrieval and fine-tuning can therefore be seen as a trade-off between freshness of knowledge and depth of knowledge.

Build and deploy RAG systems with Azure ML Prompt Flow

Azure ML Prompt Flow provides a number of features that make it easy to build and deploy RAG systems, including:

Pre-built components: Azure ML Prompt Flow provides several pre-built components for working with RAG systems, such as components for retrieving data from external databases and for generating responses with an LLM.

Visualized flows: Azure ML Prompt Flow provides a visual interface for designing and developing RAG systems. This makes it easy to create and manage complex workflows, even for users without programming experience.

Real-time endpoint deployment: Azure ML Prompt Flow allows you to deploy RAG systems as real-time endpoints. This means that you can make your RAG system available to users quickly, with Azure managing the underlying serving infrastructure.
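Once a flow is deployed as a real-time endpoint, clients call it over HTTPS. The sketch below only builds the request with Python's standard library, assuming a hypothetical scoring URI, key, and flow inputs named `question` and `chat_history`. The real URI and key are shown on the endpoint's Consume tab in Azure ML studio, and the JSON keys must match your own flow's inputs.

```python
import json
import urllib.request
from typing import Optional

# Hypothetical placeholders: copy the real values from your endpoint's Consume tab.
SCORING_URI = "https://<endpoint-name>.<region>.inference.ml.azure.com/score"
API_KEY = "<your-endpoint-key>"


def build_scoring_request(question: str, chat_history: Optional[list] = None) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a deployed flow."""
    # Assumed flow inputs; rename these keys to match your flow definition.
    body = {"question": question, "chat_history": chat_history or []}
    return urllib.request.Request(
        SCORING_URI,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_scoring_request("Which tables store customer orders?")
    print(request.get_method(), request.full_url)
    # To actually call the endpoint: urllib.request.urlopen(request).read()
```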

Technical Considerations

When building and deploying RAG systems with Azure ML Prompt Flow, there are a few technical considerations to keep in mind:

Data quality: The quality of the external database significantly impacts the quality of the RAG system’s responses. It is important to make sure that the database is well-curated and that the information is accurate and up-to-date.

Latency: real-time data retrieval adds latency, especially for large databases or complex queries. It is important to weigh this latency against retrieval quality, for example how much data is retrieved per query, when choosing the right approach for your application.

Scalability: RAG systems can scale to handle large databases and complex queries. However, it is important to design your system carefully and to choose the right Azure resources to ensure that it can scale to meet demands.
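One common way to reduce retrieval latency for repeated questions is to cache results. This sketch assumes that identical query strings can safely reuse earlier results and simulates a slow lookup with `time.sleep`; `cached_retrieve` is a hypothetical stand-in for your real retrieval call.

```python
import functools
import time

# Hypothetical cost of one retrieval (e.g. a vector-index or SQL lookup), in seconds.
SIMULATED_LOOKUP_SECONDS = 0.2


@functools.lru_cache(maxsize=1024)
def cached_retrieve(query: str) -> tuple[str, ...]:
    """Simulate an expensive retrieval; results are cached per exact query string."""
    time.sleep(SIMULATED_LOOKUP_SECONDS)
    # Return an immutable tuple so cached results cannot be modified by callers.
    return (f"passage relevant to: {query}",)


def timed(query: str) -> float:
    """Return how long one retrieval call takes, in seconds."""
    start = time.perf_counter()
    cached_retrieve(query)
    return time.perf_counter() - start


if __name__ == "__main__":
    first = timed("monthly revenue by region")   # cache miss: pays the lookup cost
    second = timed("monthly revenue by region")  # cache hit: no lookup
    print(f"first call: {first:.3f}s, repeated call: {second:.3f}s")
    print(cached_retrieve.cache_info())
```

The trade-off is freshness: cached results will not reflect updates to the database until the cache is cleared, for example with `cached_retrieve.cache_clear()`.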

Conclusion

Azure ML Prompt Flow is a powerful tool that can be used to build and deploy RAG systems in a streamlined and efficient manner. In this part of the blog post series, we have introduced the technical considerations of building and deploying RAG systems with Azure ML Prompt Flow. Below, we provide a hands-on tutorial on how to build and deploy a RAG chatbot using Azure ML Prompt Flow. Microsoft Learn contains a range of tutorials for RAG use cases.

Hands-on Tutorial: RAG with Azure ML Prompt Flow

With tools like AzureML and FAISS, creating a robust RAG system has become more accessible. In this tutorial, we’ll guide you through grounding a database into a LangChain-compatible FAISS vector index using AzureML. By the end, you’ll have a streamlined prompt flow that uses this index, ready to serve as a DBCopilot chatbot.

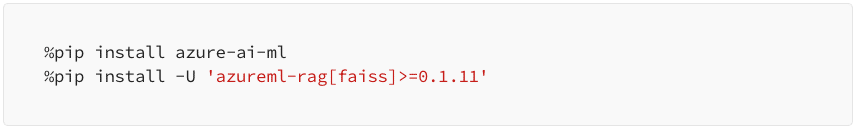
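A vector index stores one embedding per document and returns the documents whose embeddings are most similar to the query's embedding. FAISS does this in optimized native code (for example, its `IndexFlatIP` performs exact inner-product search). The pure-Python class below only illustrates the idea, using hypothetical 3-dimensional vectors in place of real embeddings; `FlatInnerProductIndex` is not part of FAISS or LangChain.

```python
import math


def normalize(vector: list[float]) -> list[float]:
    """Scale a vector to unit length so that inner product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else list(vector)


class FlatInnerProductIndex:
    """Exhaustive nearest-neighbour search, similar in spirit to a flat FAISS index."""

    def __init__(self) -> None:
        self.vectors: list[list[float]] = []
        self.payloads: list[str] = []

    def add(self, vector: list[float], payload: str) -> None:
        """Store a normalised embedding together with the text it represents."""
        self.vectors.append(normalize(vector))
        self.payloads.append(payload)

    def search(self, query: list[float], k: int = 1) -> list[tuple[float, str]]:
        """Score every stored vector against the query and return the top k."""
        q = normalize(query)
        scores = [
            (sum(a * b for a, b in zip(q, v)), payload)
            for v, payload in zip(self.vectors, self.payloads)
        ]
        scores.sort(key=lambda pair: pair[0], reverse=True)
        return scores[:k]


if __name__ == "__main__":
    index = FlatInnerProductIndex()
    index.add([1.0, 0.0, 0.0], "orders table schema")
    index.add([0.0, 1.0, 0.0], "customers table schema")
    index.add([0.0, 0.0, 1.0], "products table schema")
    print(index.search([0.9, 0.1, 0.0], k=2))
```

Exhaustive search is exact but scales linearly with the number of stored vectors, which is why FAISS also offers approximate index types for large collections.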

Step 1: Setting Up Your Environment

Before starting the RAG setup, ensure you have the necessary packages installed. If you’re working within an Azure notebook, you can use the following commands:

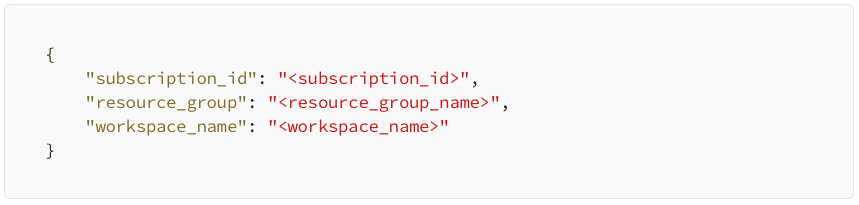

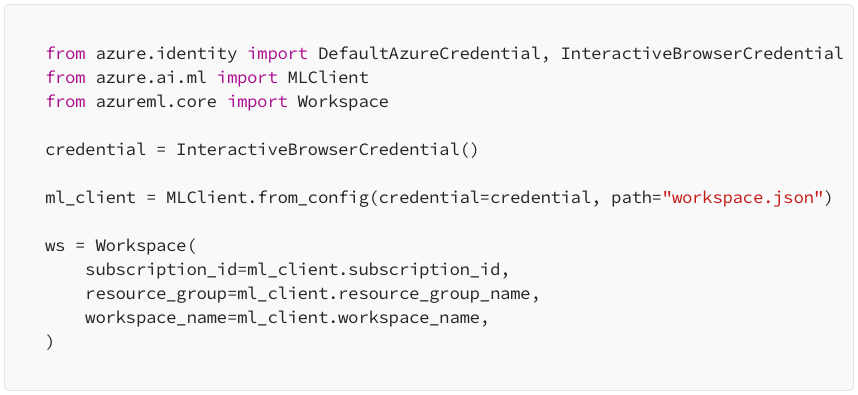

Step 2: Configuring the Azure Machine Learning Workspace

The Azure Machine Learning workspace is a top-level resource that provides a centralized place to work with all the artifacts you create. To connect to your workspace:

- Enter your workspace details, which will write a workspace.json file to your current folder.

- Use the MLClient to interact with AzureML.

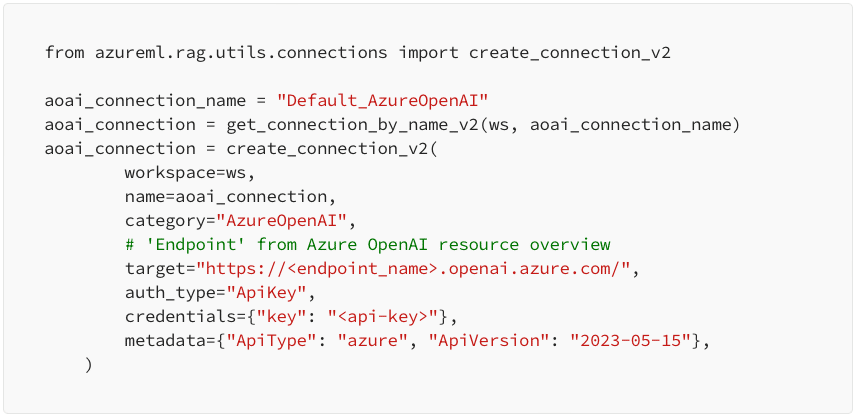
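A minimal sketch of the configuration file this step relies on, assuming the standard `subscription_id`, `resource_group` and `workspace_name` keys that `MLClient.from_config` reads. The values are placeholders and `write_workspace_config` is a hypothetical helper; the commented lines at the end show how the Azure ML Python SDK v2 (`azure-ai-ml` with `azure-identity`) would then load the file.

```python
import json
from pathlib import Path

# Hypothetical placeholders: replace with your own values from the Azure portal.
WORKSPACE_DETAILS = {
    "subscription_id": "<subscription-id>",
    "resource_group": "<resource-group>",
    "workspace_name": "<workspace-name>",
}


def write_workspace_config(folder: Path, details: dict) -> Path:
    """Write the workspace details to workspace.json in the given folder."""
    path = folder / "workspace.json"
    path.write_text(json.dumps(details, indent=2), encoding="utf-8")
    return path


# Example usage (requires azure-ai-ml and azure-identity, and valid credentials):
#   from azure.ai.ml import MLClient
#   from azure.identity import DefaultAzureCredential
#   write_workspace_config(Path.cwd(), WORKSPACE_DETAILS)
#   ml_client = MLClient.from_config(credential=DefaultAzureCredential(), path="workspace.json")
```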

Step 3: Setting Up Azure OpenAI

For optimal Q&A performance, it’s recommended to use the gpt-35-turbo model. There are detailed instructions here. To set up an Azure OpenAI instance:

- Follow the instructions to set up an Azure OpenAI Instance and deploy the model.

- Use the automatically created Default_AzureOpenAI connection, or create your own.

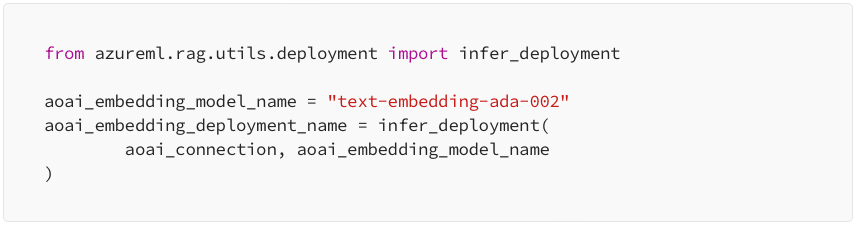

Deploy the embedding service:

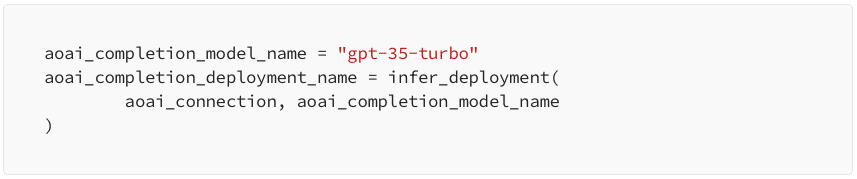

Then deploy the gpt-35-turbo chat model.
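Once the embedding and chat models are deployed, each deployment is reached through its own REST path on the Azure OpenAI resource. Prompt flow normally handles this through the connection, so the sketch below is only useful for checking a deployment directly. It builds the URLs with the standard library, assuming a hypothetical resource name, hypothetical deployment names and an example `api-version`; requests to these URLs also need an `api-key` header.

```python
from urllib.parse import urlencode

# Hypothetical values: substitute your Azure OpenAI resource name and the
# deployment names you chose. The API version is an example; check the Azure
# OpenAI documentation for the versions currently supported.
RESOURCE_NAME = "my-openai-resource"
EMBEDDING_DEPLOYMENT = "my-embedding-deployment"
CHAT_DEPLOYMENT = "gpt-35-turbo"
API_VERSION = "2024-02-01"


def deployment_url(resource: str, deployment: str, operation: str, api_version: str) -> str:
    """Build the REST URL for one operation on an Azure OpenAI deployment."""
    base = f"https://{resource}.openai.azure.com/openai/deployments/{deployment}/{operation}"
    return f"{base}?{urlencode({'api-version': api_version})}"


embeddings_url = deployment_url(RESOURCE_NAME, EMBEDDING_DEPLOYMENT, "embeddings", API_VERSION)
chat_url = deployment_url(RESOURCE_NAME, CHAT_DEPLOYMENT, "chat/completions", API_VERSION)
print(embeddings_url)
print(chat_url)
```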

Step 4: Setting Up the SQL Datastore

Connecting to a SQL Datastore

We need a SQL datastore to retrieve from. For the sake of this example, let’s create a generic one via AzureML.

- Go to the workspace in the Azure Portal.

- Click on Data -> Datastore -> + Create.

- Fill in the datastore form with the necessary details.

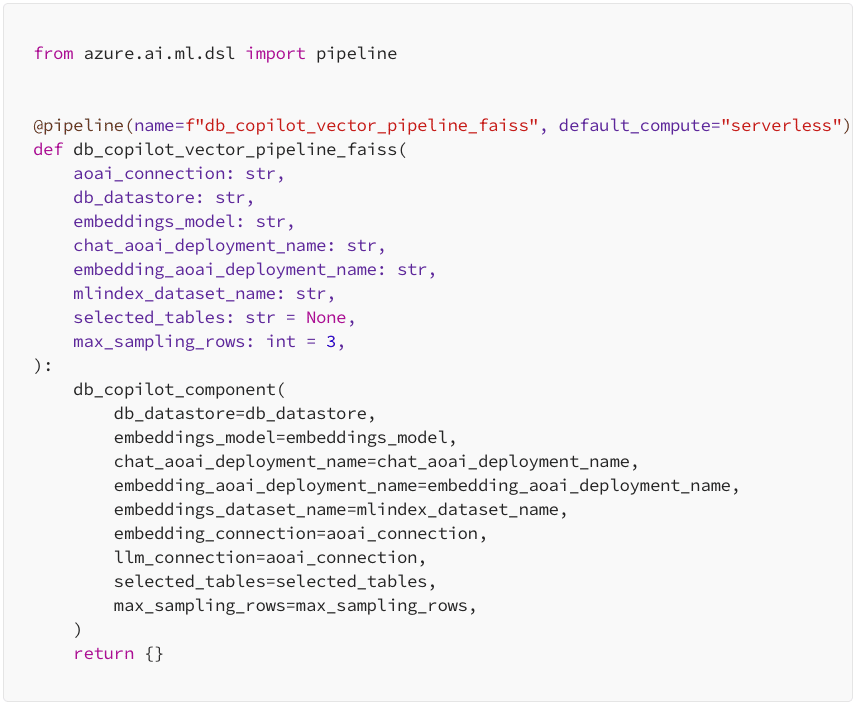
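Grounding a database for a DBCopilot-style assistant typically starts by turning its schema into text that can be embedded and indexed. As a hedged illustration, this sketch uses Python's built-in `sqlite3` in-memory database as a stand-in for the SQL datastore. The tables and the "Table X has columns: ..." text format are assumptions, not the output of the AzureML grounding component.

```python
import sqlite3


def create_demo_database() -> sqlite3.Connection:
    """Create a small in-memory database standing in for the SQL datastore."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
        """
    )
    return conn


def schema_documents(conn: sqlite3.Connection) -> list[str]:
    """Describe each table and its columns as one text document for indexing."""
    docs = []
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # Table names come from the schema itself, so formatting them in is safe here.
        # PRAGMA table_info yields (cid, name, type, notnull, default, pk) per column.
        columns = conn.execute(f"PRAGMA table_info({table})").fetchall()
        column_text = ", ".join(f"{name} ({col_type})" for _, name, col_type, *_ in columns)
        docs.append(f"Table {table} has columns: {column_text}")
    return docs


if __name__ == "__main__":
    for doc in schema_documents(create_demo_database()):
        print(doc)
```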

Step 5: Building and Running the RAG Pipeline

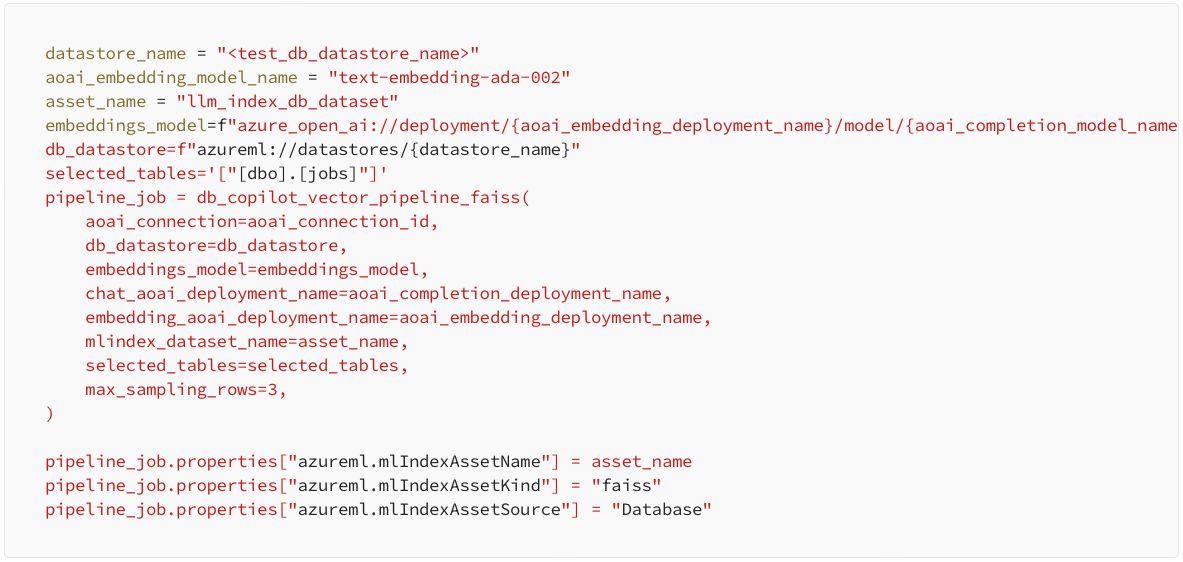

The core of our setup is the AzureML Pipeline. This pipeline grounds a database into a LangChain-compatible FAISS Vector Index, which can then be used as a DBCopilot chatbot. Retrieve the necessary components from the AzureML registry and define the pipeline. The pipeline involves:

Building a pipeline function around the component:

Defining the pipeline job:

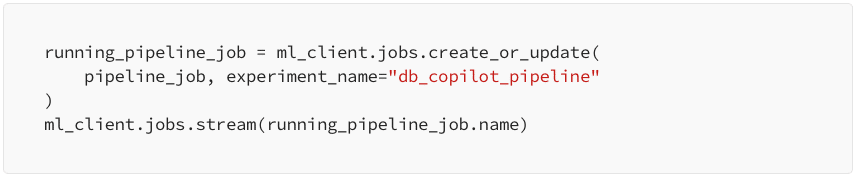

Running the pipeline job:
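The AzureML pipeline runs as managed components, but its overall shape (ground the data, embed each document, store the vectors, then look them up) can be sketched locally. This dependency-free sketch swaps the real embedding model for a bag-of-words vector over the corpus vocabulary and the FAISS index for a Python dict. `grounding_pipeline` and `lookup` are hypothetical names, not the interface of the AzureML components.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Split text into lower-case word tokens (underscores kept, e.g. customer_id)."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def embed(text: str, vocabulary: list[str]) -> list[float]:
    """Toy embedding: word counts over a fixed vocabulary, L2-normalised."""
    counts = Counter(tokenize(text))
    vector = [float(counts[word]) for word in vocabulary]
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else vector


def grounding_pipeline(documents: list[str]) -> dict:
    """Build a vocabulary, embed every grounded document, and store the index."""
    vocabulary = sorted({word for doc in documents for word in tokenize(doc)})
    vectors = [embed(doc, vocabulary) for doc in documents]
    return {"vocabulary": vocabulary, "vectors": vectors, "documents": documents}


def lookup(index: dict, question: str, k: int = 1) -> list[str]:
    """Rank stored documents by cosine similarity to the question."""
    q = embed(question, index["vocabulary"])
    ranked = sorted(
        zip(index["vectors"], index["documents"]),
        key=lambda item: sum(a * b for a, b in zip(q, item[0])),
        reverse=True,
    )
    return [doc for _, doc in ranked[:k]]


if __name__ == "__main__":
    grounded = [
        "Table customers has columns: id, name, region",
        "Table orders has columns: id, customer_id, total",
    ]
    index = grounding_pipeline(grounded)
    print(lookup(index, "Which columns does the orders table have?"))
```

Separating index construction from lookup mirrors the tutorial's split between the offline pipeline job and the prompt flow that queries the finished index.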

This article is available on Medium.
