Prompt Flow in Azure ML: exploring new features

Part 1: Industry-grade prompt management

Ben Burtenshaw

NLP Engineer

LLMs have emerged as a powerful tool for a wide range of applications. It is now straightforward to rapidly prototype applications that would have been unimaginable a year ago. However, developing and deploying LLM-based AI applications can be a complex endeavour. Enter Azure Machine Learning prompt flow, a development tool that aims to simplify how we build LLM-based AI applications. Prompt flow is integrated within Azure’s ML workspace, which is already a robust platform for machine learning.

Azure Machine Learning prompt flow isn’t just another development tool. It’s a comprehensive platform with LLM-specific benefits. This post will introduce the key concepts and show how to set it up.

Prompt Flow Methodology

Azure, known for its vast array of feature-laden offerings, designs its products grounded in a specific methodology. Through my experience with offerings like Azure ML and Synapse, I realized that understanding the underlying philosophy of the product significantly enhances its usability.

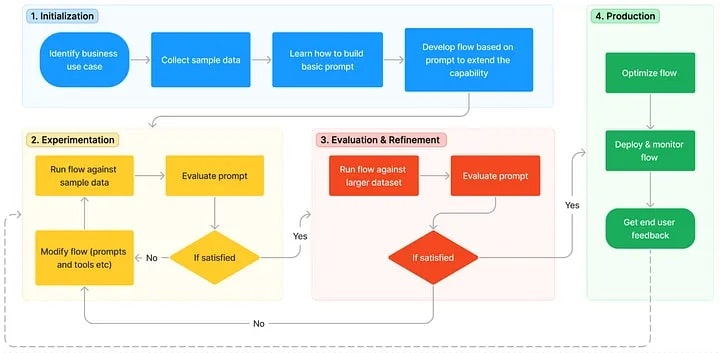

Azure splits prompt engineering into four phases: Initialization, Experimentation, Evaluation & Refinement, and Production.

Initialization: Start by pinpointing the business requirement, aggregating sample data, and mastering the creation of a foundational prompt. Subsequently, evolve a flow to broaden its utility.

Experimentation: Test your flow using the sample data. Analyze the efficacy of the prompt and, if required, modify the flow. This stage may entail repeated testing to ensure the desired outcome is achieved.

Evaluation & Refinement: Test the flow with an expansive dataset to determine its reliability. Assess the efficacy of the prompt and make the necessary tweaks. If the outcomes align with your objectives, transition to the subsequent phase.

Production: Streamline the flow to ensure both efficiency and effectiveness. Once deployed, vigilantly monitor its performance in a real-world environment and collate data about its usage and feedback. Utilize these insights to enhance the flow and inform potential modifications in preceding stages.
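To make the Experimentation and Evaluation phases more concrete, here is a minimal sketch of scoring a flow against a small labelled dataset. It does not use the Prompt flow SDK: `evaluate_flow`, `toy_sentiment_flow`, and the sample rows are hypothetical names and data invented for illustration, and the "flow" is a keyword rule standing in for a real LLM call.

```python
from typing import Callable


def evaluate_flow(flow: Callable[[str], str], dataset: list[dict]) -> float:
    """Return the fraction of rows where the flow output matches the expected label."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    correct = 0
    for row in dataset:
        prediction = flow(row["input"])
        # Compare case-insensitively and ignore surrounding whitespace.
        if prediction.strip().lower() == row["expected"].strip().lower():
            correct += 1
    return correct / len(dataset)


# Hypothetical stand-in for a real flow: a keyword rule instead of an LLM call.
def toy_sentiment_flow(text: str) -> str:
    return "positive" if "good" in text.lower() else "negative"


# Invented sample data, as you might collect in the Initialization phase.
sample_data = [
    {"input": "The service was good", "expected": "positive"},
    {"input": "Terrible experience", "expected": "negative"},
    {"input": "Not good at all", "expected": "negative"},
]

accuracy = evaluate_flow(toy_sentiment_flow, sample_data)
print(f"accuracy: {accuracy:.2f}")
```

The third row is deliberately one the toy rule gets wrong, which is the kind of failure a larger evaluation dataset is meant to surface before the Production phase.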

Prompt Flow Entities

Prompt flow abstracts the building blocks of a prompt engineering stack into a family of expected entities. In the following posts, I’ll connect these in examples.

Connections

Connections in Prompt flow link your application with remote APIs or data sources. They’re the guardians of vital info, like endpoints and secrets, ensuring everything communicates securely and efficiently.

Within the Azure Machine Learning workspace, you’ve got options. You can set up connections as shared resources or keep them private. And for those concerned about security, all secrets are safely stored in Azure Key Vault, meeting top-notch security standards. You can also build custom connections in Python.
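As a rough illustration of what a connection holds, the sketch below models one as a small Python object that keeps an endpoint and a secret together and hides the secret when printed. This is not the Prompt flow connection API: `ApiConnection`, `from_env`, the `DEMO_API_KEY` variable, and the `example.invalid` endpoint are all assumptions made up for this example, and an environment variable stands in for Azure Key Vault.

```python
import os
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ApiConnection:
    """Hypothetical connection: an endpoint plus a secret kept out of repr()."""

    name: str
    endpoint: str
    api_key: str = field(repr=False)  # excluded from repr so it is not logged by accident

    @classmethod
    def from_env(cls, name: str, endpoint: str, key_var: str) -> "ApiConnection":
        # Read the secret from the environment (a stand-in for a secret store).
        api_key = os.environ.get(key_var)
        if not api_key:
            raise KeyError(f"environment variable {key_var} is not set")
        return cls(name=name, endpoint=endpoint, api_key=api_key)

    def auth_headers(self) -> dict[str, str]:
        # Headers a client could attach to requests sent to the endpoint.
        return {"Authorization": f"Bearer {self.api_key}"}


# Demo only: a placeholder value so the sketch runs without real credentials.
os.environ["DEMO_API_KEY"] = "not-a-real-key"
conn = ApiConnection.from_env("demo", "https://example.invalid/v1", "DEMO_API_KEY")
print(conn)  # the api_key field does not appear in the printed representation
```

Keeping the secret out of the object's printed form mirrors the idea behind storing secrets separately from the flow definition: the flow refers to the connection by name and never embeds the key itself.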

Runtimes

Runtimes in Prompt flow are the compute resources that your application will use. They rely on Docker images that are loaded with all the tools you might need.

In the Azure Machine Learning workspace, there’s a default environment to kick things off, referencing the prebuilt Docker image. You can also customize and roll out your unique environment in the workspace, which will be a familiar feature to Azure ML users.

Prompt flow offers two runtime types: Managed Online Deployment Runtime and Compute Instance Runtime. The first offers a managed, scalable solution that satisfies most applications but lacks some of the managed identity features. Under the hood, this abstracts Azure ML online endpoints. The compute instance runtime gives you more detailed control over the definition but is less horizontally scalable. This abstracts Azure ML compute instances.

Flows

Flows are the centerpiece of Prompt flow. They represent the foundational schema of your LLM-based AI application. This paradigm emphasizes orchestrating data movement and processing in a methodical and structured manner. They are the ‘pipeline’ of the prompt engineering methodology.

Central to the flow’s architecture are the nodes, each symbolizing a distinct utility tool. These nodes manage data, execute specific tasks, and administer algorithms. For aficionados of granular customization, the user interface has been meticulously crafted to cater to your needs. Its design is reminiscent of a technical notebook, facilitating easy adjustments to settings or direct interactions with underlying code. Furthermore, the pipeline-style DAG visualisation provides a panoramic perspective on the interrelationships between various components.
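The idea of a flow as a DAG of nodes can be sketched in a few lines of plain Python. This is not how Prompt flow is implemented; `run_flow`, the node names, and `fake_llm` are hypothetical, and the standard library's `graphlib` is used only to show nodes running in dependency order.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Each node is (function, names of upstream nodes whose outputs it consumes).
Node = tuple[Callable[..., str], list[str]]


def run_flow(nodes: dict[str, Node], flow_input: str) -> dict[str, str]:
    """Run every node after its dependencies and return all node outputs."""
    graph = {name: set(deps) for name, (_, deps) in nodes.items()}
    outputs: dict[str, str] = {}
    for name in TopologicalSorter(graph).static_order():
        func, deps = nodes[name]
        # Nodes without dependencies receive the flow input directly.
        args = [outputs[dep] for dep in deps] if deps else [flow_input]
        outputs[name] = func(*args)
    return outputs


def clean(text: str) -> str:
    # Collapse runs of whitespace into single spaces.
    return " ".join(text.split())


def build_prompt(cleaned: str) -> str:
    return f"Summarize in one sentence:\n{cleaned}"


def fake_llm(prompt: str) -> str:
    # Stand-in for an LLM call so the sketch runs offline.
    return f"[summary of a {len(prompt)}-character prompt]"


flow = {
    "clean": (clean, []),
    "build_prompt": (build_prompt, ["clean"]),
    "llm": (fake_llm, ["build_prompt"]),
}

results = run_flow(flow, "  Prompt   flow  chains tools   into a DAG. ")
for node_name, output in results.items():
    print(f"{node_name}: {output!r}")
```

Declaring dependencies per node, rather than hard-coding the call order, is what lets a tool draw the graph and swap out a single node without touching the rest.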

Tools

The tools provided within Prompt flow implement explicit features that LLMs can utilise to perform tasks, typically collecting fresh data from the web or performing operations that are not suited to language models. Such features will be familiar to LangChain and LlamaIndex users.

According to the official Prompt flow documentation:

“Tools are the foundational elements of a flow in Azure Machine Learning’s Prompt flow. They function as simple, executable entities, each honed for a particular task. This modular approach empowers developers to amalgamate these tools and carve out a flow tailored to diverse objectives.”

LLM Tool: Designed for custom prompt creation, the LLM Tool utilizes the capabilities of large language models for tasks like content summarization and automated customer responses.

Python Tool: This tool supports the development of custom Python functions, suitable for web scraping, data processing, and third-party API integration.

Prompt Tool: Primarily used for string-based prompt preparation, the Prompt Tool is optimized for complex scenarios and works seamlessly with other prompt and Python tools. For example, you could use this to process the output of your LLM to JSON.
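As an example of the kind of post-processing a custom Python function might do, here is a minimal sketch that pulls a JSON object out of a chatty LLM reply. The function name `parse_llm_json` and the sample reply are invented for illustration, and the step that registers the function as a tool in Prompt flow is left out so the code runs with only the standard library.

```python
import json
import re


def parse_llm_json(llm_output: str) -> dict:
    """Parse the text between the first '{' and the last '}' as a JSON object."""
    match = re.search(r"\{.*\}", llm_output, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in LLM output")
    # json.loads raises json.JSONDecodeError if the extracted span is not valid JSON.
    return json.loads(match.group(0))


# Invented reply: models often wrap structured output in extra prose.
reply = (
    'Sure, here is the result: {"sentiment": "positive", "score": 0.9} '
    "Let me know if you need anything else."
)

parsed = parse_llm_json(reply)
print(parsed["sentiment"], parsed["score"])
```

This simple approach assumes the reply contains a single JSON object; a reply with several separate objects would need a stricter parser.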

A Word of Caution: It’s important to note that as of now, Prompt flow is still in its public preview phase. This means there’s no service-level agreement tied to it, making it less ideal for production-level tasks. Features are still being refined, and some may possess limited capabilities. I found that a number of the example Tools were less ready than other features.

Variants

In the world of Prompt flow, a variant is like a unique version of a tool node, each with its own settings. Right now, this is a feature of the LLM tool. Think of it this way: if you’re trying to summarize a news article, you can play around with different prompt versions or connection settings. It’s all about finding the perfect blend for your needs.
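The news-summary example can be sketched as a dictionary of variants, each pairing a prompt template with its own settings. The variant names, templates, and temperature values below are assumptions chosen for illustration, and Python's `string.Template` is only a stand-in for whatever templating the LLM tool applies.

```python
from string import Template

# Hypothetical variants of one summarization node: same task, different settings.
variants = {
    "variant_0": {
        "template": Template("Summarize this article: $article"),
        "temperature": 0.2,
    },
    "variant_1": {
        "template": Template(
            "Summarize this article in one sentence for a busy reader: $article"
        ),
        "temperature": 0.7,
    },
}


def render_variants(article: str) -> dict[str, dict]:
    """Fill each variant's template and carry its settings alongside the prompt."""
    return {
        name: {
            "prompt": cfg["template"].substitute(article=article),
            "temperature": cfg["temperature"],
        }
        for name, cfg in variants.items()
    }


article = "Azure ML adds prompt flow for building LLM applications."
rendered = render_variants(article)
for name, request in rendered.items():
    print(name, request["temperature"], request["prompt"])
```

Each rendered request could then be sent to the model and the outputs compared side by side, which is the comparison that variants are meant to make easy.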

Conclusion

Whether you’re a seasoned developer or just starting out in the world of AI, Azure Machine Learning prompt flow offers the tools, resources, and guidance to ensure that your LLM-based AI applications are a resounding success. Dive in and experience the future of AI development today!

Remember, Prompt flow is in public preview, so it might not be suitable for production workloads yet. Always refer to the official documentation for detailed steps and updates.

Using Prompt Flow

There’s already a handy guide on using Prompt flow on Microsoft Learn. I’ve condensed it here for ease. In later posts, I’ll go into more detail with some tutorials.

References

Ready to dive into the world of Azure Machine Learning prompt flow? Here are some resources to get you started:

- Get started in Prompt flow (preview) — Azure Machine Learning

- Learn how to use Prompt flow in Azure Machine Learning studio

- Prompt tool in Azure Machine Learning prompt flow (preview) — Azure Machine Learning

- LLM tool in Azure Machine Learning prompt flow (preview) — Azure Machine Learning

This article is available on Medium.
